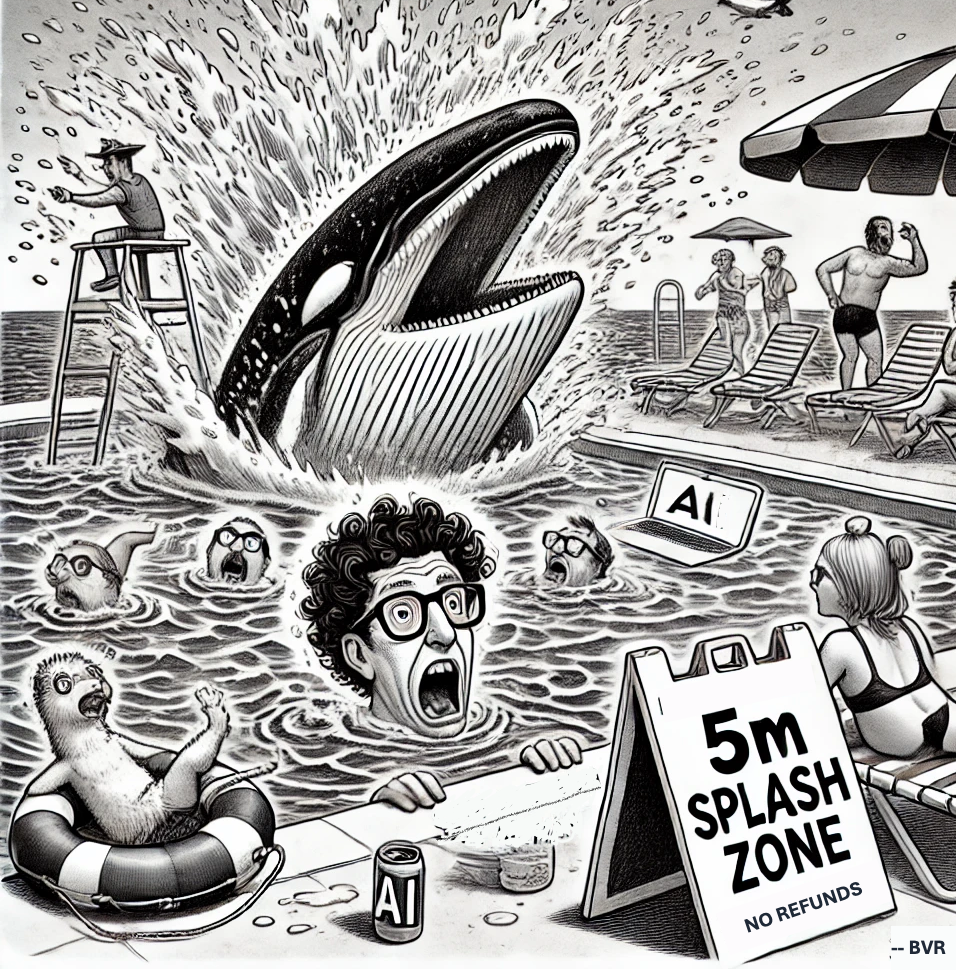

The DeepSeek Aftermath: Reassessing the “Biggest Splash” in AI

I posed five claims about DeepSeek last weekend. Then the world changed again.

Last Sunday, I wrote “DeepSeek: The Biggest Splash in AI Right Now”, focusing on five claims about a small Chinese startup’s alleged cost breakthroughs, security dilemmas, and the precarious state of US export controls. When I woke up Monday morning, it felt like the world had changed, and DeepSeek mania hit a fever pitch.

A week later (that’s six months in AI years), the dust is starting to settle—but the echoes of DeepSeek’s revelations are still reverberating through AI circles. Much has happened since, and it’s time for a deeper post-mortem.

Below, I circle back to those five claims and where they stand today: which ones are shaping up to be accurate, which are overblown, and which have become thornier than we ever expected.

1. DeepSeek Is Misrepresenting the Cost to Train Its Models

Initial Claim

DeepSeek publicly stated that it trained a GPT-4-grade model (DeepSeek-V3) for just $5.5 million. Given that OpenAI, Anthropic, and xAI typically command budgets in the hundreds of millions for similar feats, many experts (and let’s be honest, I was among them) viewed the figure as eyebrow-raisingly low. The capital outlay for an investment (lots of GPUs) and the cost allocated to one specific use of it (e.g., a single training run) can be very different numbers.

What We’ve Learned

Massive GPU Ownership: Multiple reports now suggest that DeepSeek’s parent company, High-Flyer, holds at least $500 million worth of GPUs, a cluster roughly on par with that of a medium-sized US AI lab. If so, the total capital outlay behind DeepSeek’s training could be far above $5.5 million, even if the company allocates only a fraction of that GPU fleet to any single training run.
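To make the gap between a per-run figure and a capital outlay concrete, here is a back-of-the-envelope sketch. The per-run inputs (about 2.788 million H800 GPU-hours, priced at an assumed rental rate of $2 per GPU-hour) are the ones DeepSeek gives in its V3 technical report, which itself notes that the total excludes prior research and ablation experiments. The $500 million fleet value is the reported, unconfirmed estimate above. The helper name is hypothetical, and this is an illustration of the accounting distinction, not an audit of DeepSeek’s books.

```python
def run_cost_at_rental_rate(gpu_hours: float, usd_per_gpu_hour: float) -> float:
    """Price one training run as if every GPU-hour were rented (hypothetical helper)."""
    return gpu_hours * usd_per_gpu_hour


# Inputs as stated in DeepSeek's V3 technical report: ~2.788M H800 GPU-hours,
# priced at an *assumed* rental rate of $2 per GPU-hour.
V3_GPU_HOURS = 2_788_000
ASSUMED_RENTAL_USD = 2.0

# Reported (unconfirmed) value of High-Flyer's GPU holdings.
FLEET_CAPEX_USD = 500_000_000

run_cost = run_cost_at_rental_rate(V3_GPU_HOURS, ASSUMED_RENTAL_USD)
ratio = FLEET_CAPEX_USD / run_cost  # how many "headline runs" the fleet's price would cover

print(f"Headline run cost:   ${run_cost / 1e6:.2f}M")
print(f"Reported fleet cost: ${FLEET_CAPEX_USD / 1e6:.0f}M")
print(f"Fleet / run ratio:   ~{ratio:.0f}x")
```

The headline number, in other words, is a pricing convention for one run rather than the full bill for building the capability, which is why both figures can be true at the same time.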

Industry Convergence on Lower Costs: Despite initial skepticism, key figures like Brad Gerstner (notably an OpenAI investor) and even Dario Amodei, CEO of Anthropic, concede that training a GPT-4-era model at the $5–$15 million mark is feasible today. We’re in an era of rapid algorithmic optimization (model distillation, mixture-of-experts, better data pipelines), which is slashing training costs across the board.

Verdict

DeepSeek’s $5.5 million figure probably isn’t the entire story; they’ve likely spent much more in aggregate. Still, that “low-ball” claim isn’t fantasy either. The cost curve has fallen so dramatically that a roughly $5 million price tag for a credible, near-frontier model isn’t the impossibility it was a year ago.

2. China Is Making the U.S. Tech Sector Blink

Initial Claim

DeepSeek’s big reveal would force the US heavyweights to second-guess their sky-high spending on data centers, GPU hoarding, and proprietary large language models. The US “AI juggernauts” might—at least momentarily—blink in the face of a Chinese challenger that apparently (a) outspent them in GPU hardware, or (b) simply found a more efficient training recipe.

What Happened

NVIDIA’s Market Jolt: Just 12 hours after my article was published, NVIDIA stock dropped an astonishing 17%. The conventional reasoning: if training costs collapse, GPU supply might overshoot demand. That drop likely reflected investor anxiety more than a fundamental supply-demand meltdown. Indeed, if cheaper training truly unlocks a wave of new AI startups and applications with cheaper, higher-quality inference, GPU usage could increase as more players enter.

Billion-Dollar US Valuations: The rumored $300–$340 billion valuation for OpenAI, plus continuing major deals from Anthropic, Microsoft, and Google, suggests the US labs remain bullish. They’re certainly not slamming the brakes. If anything, they’re doubling down on new models, advanced data centers, and user-friendly commercial products.

Verdict

Yes, DeepSeek rattled the US market, but only briefly, and for now the shock is wearing off. American AI giants have not abandoned their spending sprees. The biggest shift so far is heightened caution and scrutiny: are their capital investments truly placing them years ahead, or only months? The US is blinking, but it sure hasn’t walked away from the table.

3. China Is Pulling a TikTok 2.0…but for Corporations

Initial Claim

I posited that DeepSeek’s trifecta of (1) drastically cheaper APIs, (2) open-sourced core models, and (3) a base in China might echo the TikTok phenomenon: a foreign platform offering near-viral utility but laden with potential data-privacy pitfalls.

Where We Stand

Enterprise and Government Security Fears: DeepSeek’s app soared to #1 in the “productivity” category of the Apple App Store for nearly two weeks, and between the app and its developer API, DeepSeek invited a barrage of inbound data from all over the globe. Some researchers claimed that user data might be forwarded (or at least stored) with minimal encryption. Last Wednesday, cybersecurity firm Wiz published findings that DeepSeek had exposed over a million lines of sensitive internal data, including user chat histories, API secrets, and backend operational details.

US Government Reaction: The DoD, and in some cases entire government agencies, appear uncertain how to respond. One major DoD CIO told me they weren’t sure who in the new administration was empowered to issue a ban, a symptom of the policy vacuum that opens while key personnel are still being hired. Meanwhile, some agencies tried immediate, sweeping bans, leading to confusion over whether a ban covers every DeepSeek release (including downloaded open-source versions).

Verdict

The “TikTok 2.0 for corporations” fear looks increasingly valid. With widespread usage and uncertain data governance, DeepSeek is a major security wildcard. We’re witnessing a slow-motion scramble among IT teams, compliance officers, and government agencies to figure out the real scope of the threat—and who must lead the response. So yes, it’s not just hype.

4. The Big Proprietary Vendors Are Toast

Initial Claim

If a Chinese upstart can match or surpass the best of OpenAI and Anthropic at a fraction of the cost, and then open-source the result, could that decimate the business of selling proprietary AI models?

Recent Developments

Proprietary Players Fighting Back: Far from throwing in the towel, OpenAI, Microsoft, Mistral, and Google have responded by slashing inference costs, launching lighter “distilled” versions of their top-tier models, and wooing enterprise clients with custom solutions and compliance frameworks. The early signals show that vendors believe customers still value reliability, brand trust, and integration support—areas where older, deep-pocketed players can deliver.

Open-Source Gains, But Not Dominance: Yes, some smaller organizations jumped to open-source alternatives, thanks to cost savings and flexible licensing. However, certain specialized tasks—particularly advanced code generation and large-scale reasoning—often remain best served by the largest proprietary models. And as models incorporate more specialized training (like domain-specific data or enterprise knowledge graphs), closed vendors’ resources and partnerships often lead to better-tailored solutions.

Verdict

They’re not toast, but they feel the heat. What’s changing is the speed at which open-source and cheaper commercial models keep catching up. Proprietary vendors might still maintain a lead in certain specialized categories, but that margin may be narrowing, not widening, every quarter.

5. US Export Controls Are Not Working

Initial Claim

If DeepSeek truly used thousands of advanced GPUs to train a new frontier model, US export bans did little to stop it. Either DeepSeek’s performance claims are overblown, or the American approach to restricting technology is borderline ineffective.

What We’ve Seen

Black Market Flow: Reports confirm that the black market in advanced GPUs is healthy, with H100s and A100s showing up in China, at times for even lower prices than in the US.

Loopholes and “Grey” Channels: Some chips were purchased outright before the ban. Others arrived through third-party brokers in countries with looser export restrictions, or none at all.

Proxy Servers: It’s fairly easy for buyers in any country to set up servers (real or virtual) in the US as an end-around, gaining remote access to restricted hardware without ever importing it.

Domestic GPU Production: China-based heavyweights, from Huawei to Alibaba, are ramping up domestic chip designs (some geared for training, others for inference). They’re not exactly at parity yet, but each iteration narrows the gap.

Impact

Short-Term vs. Long-Term: In the short term, it’s plausible the US can inconvenience China’s biggest AI labs, especially if enforcement targets large-scale shipments. But as we keep seeing, talented labs only need tens of thousands of GPUs to get a frontier model off the ground, and that’s entirely feasible via partial domestic supply and smuggling channels.

Symbolic Wins, Practical Limitations: The US might “successfully” block the direct sale of millions of top-tier GPUs to one or two well-known Chinese labs. But it’s extremely difficult to prevent a patchwork approach where thousands of GPUs trickle in from global sources.

Convergence: As training efficiency skyrockets, it’s not clear that “millions of GPUs” will ever be strictly necessary, undercutting the entire premise that controlling large GPU shipments secures a US lead.

Verdict

While certain aspects of US export controls slow China’s AI progress, they’re far from a silver bullet. It’s a game of diminishing returns: the US invests more in policing, while clever brokers and domestic GPU alternatives fill the gap. DeepSeek is living evidence that China can still muster the resources to train advanced AI models—export rules notwithstanding.

Final Thoughts

At the end of the day, DeepSeek did exactly what I said it might: it forced everyone—investors, policymakers, US labs—to rethink their assumptions about the cost, security, and global power dynamics of advanced AI. Whether you view DeepSeek as a cunning Trojan horse, a plucky underdog, or just a well-funded team with a flair for sensational press, there’s no denying its impact. So, are we left in an era of unstoppable Chinese AI ascendancy and toothless US policy? Not exactly. The bigger picture is more nuanced:

China “Blink” Factor: The US still has massive leading labs, immense enterprise buy-in, and some of the best AI talent on Earth. A fleeting investor panic doesn’t amount to a wholesale shift in advantage.

Data and Corporate Adoption: As training costs plummet, top-tier AI is becoming less about who can train the biggest model and more about who has the best data, the smoothest pipelines, and the broadest enterprise relationships. OpenAI’s rumored $300+ billion valuation is as much a bet on brand, ecosystem, and distribution as on pure model performance. Or they’ve just figured out AGI.

Security and Regulatory Realignments: The “TikTok 2.0” effect is a real policy conundrum. Washington is only now grappling with the widespread adoption of a Chinese-run reasoning engine. If you found the last few years of TikTok-based data concerns messy, buckle in — free AI apps from China and other countries may make it far more complicated.

Post-Export Control: US policymakers are finally reckoning with the reality that hardware restrictions alone won’t contain AI’s expansion. We might see a renewed push for intangible controls, from limiting training data access to regulating advanced model distillation. But pulling that off is a far more delicate, global game than banning microchips.

The best takeaway? This space is accelerating too quickly for any single narrative to hold for long. The US, China, other countries, megacorporations, and open-source communities are all leveling up in parallel. Don’t blink, or you might miss the next shift—because in AI, the ground never stops moving.

Videos, articles, and recommended readings:

After last week’s post, I ended up on The Will Cain Show a week after it debuted. Awesome to go from blog to the highest-rated daytime talk show on Fox News in less than 48 hours.

DeepSeek Debates: Chinese Leadership On Cost, True Training Cost, Closed Model Margin Impacts. Dylan Patel and his firm SemiAnalysis wrote an absolute banger with their assessment of all things DeepSeek. He’s the best analyst in the game, and it was great to see him on Lex Fridman’s podcast this week. Hayden Field of CNBC provides a nice overview of Dylan’s post.

Meta Engineers See Vindication in DeepSeek’s Apparent Breakthrough. Cade Metz and Mike Isaac do a great job covering Meta’s role in the DeepSeek/open-source moment. Meta has played, and will continue to play, a central role in the progress of AI.

On DeepSeek and Export Controls. Dario Amodei, CEO, Anthropic. Dario lays out a number of aspects of the DeepSeek cost estimates and does a great job explaining how training gets cheaper on several fronts. He also covers his take on export controls. I appreciate his perspective but disagree with his position.

Supporting facts:

Wiz researchers find sensitive DeepSeek data exposed to internet

As OpenAI blocks China, developers scramble to keep GPT access through VPNs

ByteDance is secretly using OpenAI’s tech to build a competitor

OpenAI has evidence that its models helped train China’s DeepSeek

Mistral Small 3 brings open-source AI to the masses — smaller, faster and cheaper