When Logs Turn Toxic

Why OpenAI’s forced data retention should alarm every enterprise and government agency

Imagine every message you’ve ever sent to your favorite AI tool (every draft email, military insight, raw business idea, snippet of confidential source code, or sensitive personal matter) being held indefinitely in some vast digital vault. It sounds dystopian, but due to a recent court order against OpenAI, it's now a reality.

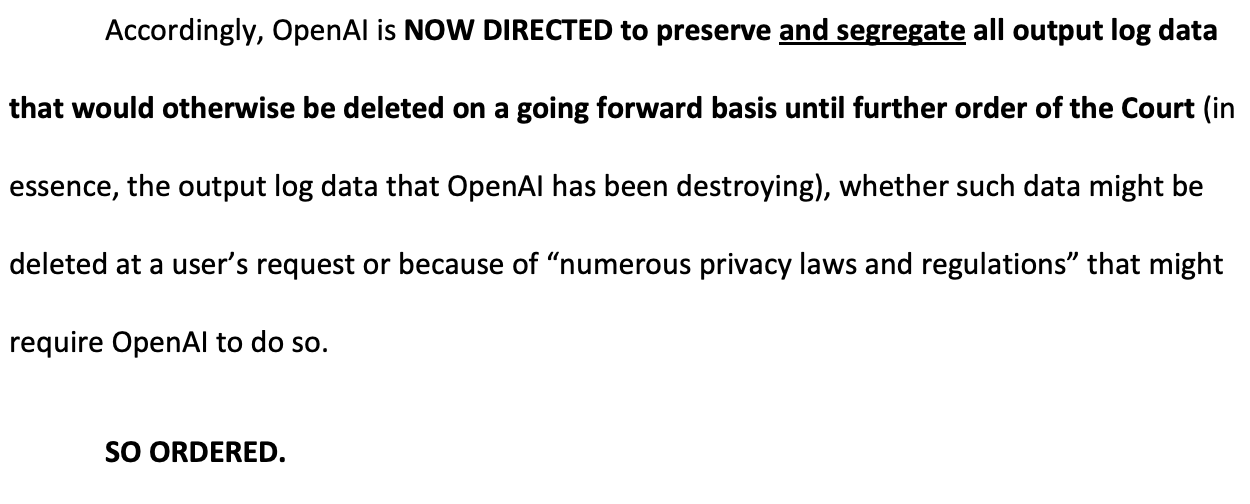

A few days ago, news broke of a May 13 court order in which a federal judge directed OpenAI to preserve all ChatGPT logs indefinitely. The ruling came amid ongoing copyright-infringement litigation brought by major news organizations, led by The New York Times. Previously, OpenAI deleted logs after 30 days (standard in the industry) unless users explicitly chose otherwise. Now, every chat you've had, casual or deeply sensitive, is archived indefinitely.

This mandate applies to everyone using ChatGPT’s free, Plus, or Pro versions, along with standard API users. Fortunately, ChatGPT Enterprise customers and certain API customers with "Zero Data Retention" contracts are exempt. But for millions, including countless corporate and government personnel using the consumer versions of ChatGPT, the implications are serious and escalating.

The risk of forced ChatGPT log retention doesn’t exist in a vacuum. It follows a line of cases where stores of data became strategic vulnerabilities. Take TikTok: U.S. lawmakers and intelligence agencies have long raised alarms that its China-based owner, ByteDance, could be compelled to hand over U.S. user data under China’s National Intelligence Law. Those national security fears led to bipartisan legislation requiring ByteDance to divest TikTok or face a nationwide ban. Similarly, the 2015 Ashley Madison breach exposed personal and even payment information for 36 to 37 million users. Many of them had paid to delete their profiles, only to discover their data was never removed. And when U.S. officials determined that Grindr, a dating app then under Chinese control, posed a national security risk because of the sensitive personal data it held, the Committee on Foreign Investment in the United States (CFIUS) forced its owner, Kunlun, to sell its majority stake in 2020. These episodes underscore a crucial truth: when data remains accessible long-term, whether through deliberate action or accident, it turns into a national vulnerability.

So, what groups are most at risk?

The Youth - Younger generations, especially Gen Alpha and Gen Z, have embraced ChatGPT as more than an assistant—it’s a confidant. Recent studies by Chegg indicate roughly 80% of students globally use GenAI tools like ChatGPT. They entrust AI with emotional anxieties, relationship advice, and career guidance, turning it into a therapist, friend, and advisor. Now imagine these same users in government roles, casually interacting with GenAI tools to brainstorm ideas or strategize informal solutions—each interaction now a potentially indefinite liability. The line between personal, professional, and governmental becomes dangerously blurred.

The Lazy Masker - Within large, security-conscious enterprises and the government, ChatGPT Enterprise is often not used or not allowed, so employees fall back on the consumer product. To make matters worse, "lazy masking" (minimally editing sensitive content before submitting it to AI tools) is an especially acute problem. It’s disturbingly easy to imagine a well-meaning official sharing partially redacted sensitive data, defense strategies, or internal policy deliberations. Minimal edits may seem harmless, but context can reveal more than intended. For instance, a government analyst might remove names and dates from a sensitive brief but leave enough detail intact that adversaries or unauthorized parties could deduce critical insights. Under the indefinite retention order, these accidental leaks no longer expire after 30 days; they sit in storage, waiting to be found.

Third-party tool users - Even if an agency explicitly prohibits direct use of ChatGPT, indirect exposure remains through the various SaaS tools built on OpenAI's models. While many companies (hopefully) have secure terms in place, the supply chain around many AI tools is still developing and may be suspect. These hidden dependencies magnify exposure. Your data security isn't solely an internal policy issue; it's tied to your vendor ecosystem.

Pretty much every one of the 400 million weekly active users. Like, what the actual heck are you thinking, Southern District?

This issue isn’t merely about inconvenience; it’s about fundamental security and privacy. Short-term log retention is practical for misuse prevention and safety enhancement. But indefinite retention dramatically escalates risks. A 30-day log is a manageable risk; the same log stored indefinitely becomes an inviting target for malicious actors and a significant cybersecurity threat.

For government agencies, particularly those involved in national security, the implications are even graver. Conversations involving sensitive but informally discussed information, insights, hypothetical scenarios, and internal analyses are now subject to indefinite retention, creating unprecedented exposure risks.

Towards Responsible AI Policies

OpenAI’s challenge to this ruling deserves broad support. Indiscriminate data retention threatens fundamental privacy and national security principles. AI interactions should enjoy confidentiality standards equal to secure communications within sensitive sectors.

Regardless of copyright lawsuits, we must advocate for robust regulations prioritizing data minimization and strictly limiting unnecessary retention. As AI becomes deeply integrated into personal, professional, and government contexts, protecting privacy and enabling secure communication become paramount.

Without deliberate action, every informal strategic thought, confidential briefing, and hastily redacted document shared with AI may become indefinitely vulnerable, posing severe risks we can ill afford.

Ben Van Roo is CEO of Legion Intelligence, specializing in secure, self-hosted agentic AI for mission-critical and government environments. He writes extensively on AI, enterprise strategy, and national security.